In the fast-paced world of travel, accurate and up-to-date flight information is critical for both passengers and companies. Kayak is a popular travel website that offers a wealth of useful flight information that can be put to use in many ways. However, collecting this information efficiently can be difficult without the right approach.

With modern tools and data-analysis techniques, getting flight information from Kayak has become easier. Businesses and developers can use specialized software and programmatic interfaces (such as APIs) to collect flight schedules, prices, and availability quickly and accurately.

Kayak.com is a travel meta-search engine that lets users search hundreds of booking websites for hotels, flights, vacation packages, and rental cars. Priceline.com acquired it in 2012. In other words, Kayak does for online travel agencies such as Orbitz and Expedia what those platforms do for individual airline websites: it aggregates their results in one place. Beyond combining these aggregators, Kayak adds its own layers of information on top of the basic results to provide a richer user experience. It handles not only flight searches, where price, duration, and number of stopovers are usually the main factors that affect the results, but also hotel searches, where many more variables come into play.

Kayak data scraping means using software to collect information from the Kayak website automatically. The program visits the site's pages and extracts details such as flight prices, hotel availability, or rental-car options. People do this to compare prices or to understand trends in the travel industry. What makes Kayak especially useful is that, unlike most travel apps and sites, it lets customers compare hundreds of travel and airline websites with a single search.

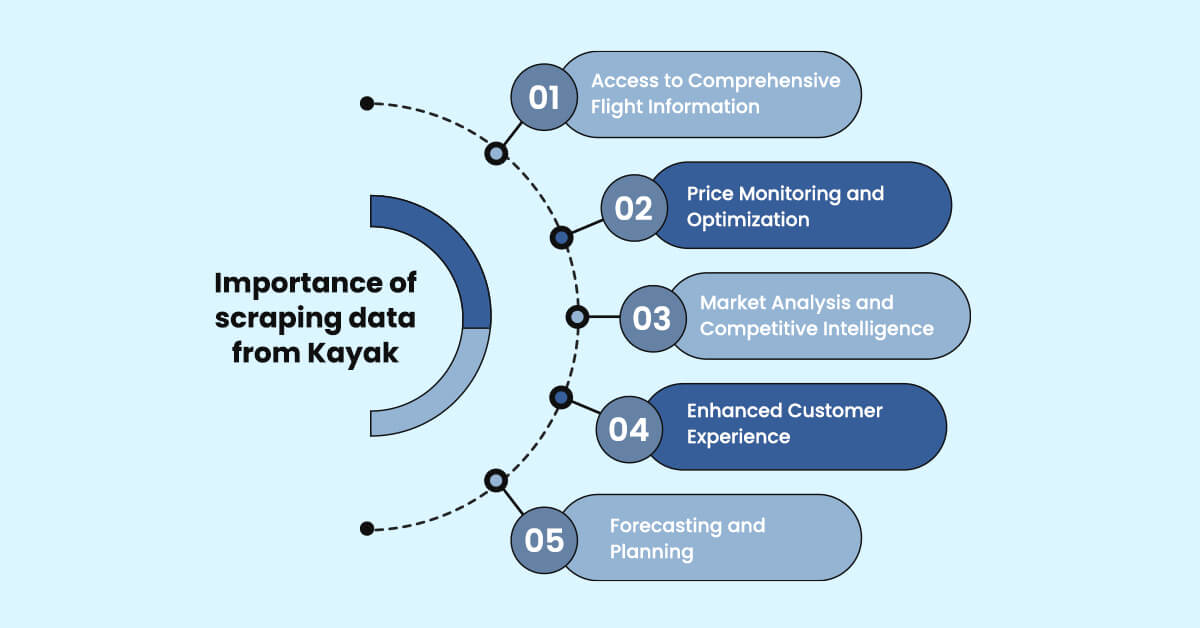

Scraping data from Kayak can be valuable for both travelers and travel-related companies. Here are a few reasons why:

Kayak is a rich source of flight information: it gathers flight details from many airlines and travel agencies in one place. By scraping Kayak, businesses gain access to this data, which helps them offer better services to travelers or understand the market better if they work in the travel industry. Companies that draw on Kayak's broad coverage can give passengers more accurate and tailored options, improving the overall travel experience.

Businesses often scrape flight price data from Kayak to track how fares change. Airfares can fluctuate considerably, so companies need to keep an eye on these changes. By collecting data from Kayak regularly, businesses can spot price changes quickly. This helps them decide when to offer discounts or adjust their own prices to stay competitive.

Scraping data from Kayak helps businesses know what their competitors are doing. They can see which routes are popular, what prices other companies are offering, and what deals are available. This gives them an advantage because they can make better decisions using this information.

Scraped Kayak data gives businesses reliable, up-to-date flight information, allowing them to offer their customers a seamless booking experience. By presenting accurate information about available flights, itineraries, and fares, businesses help customers make informed choices. Transparent flight information simplifies booking and builds customer confidence and loyalty. Customers who feel empowered to make informed decisions are more likely to return and recommend the service to others.

Data scraped from Kayak also helps businesses forecast demand for flights, plan how many tickets to buy, and decide where to invest. This lets them anticipate future customer preferences and adjust their strategies to meet that demand.

When scraping data from Kayak, it is important to follow best practices so that data collection is both effective and ethical. Let's look at them in detail:

Before scraping data from Kayak, carefully review their terms of service to ensure compliance. Respect any restrictions or guidelines they have in place regarding automated data collection.

Implement rate limiting in your scraping scripts to avoid overwhelming Kayak's servers. Additionally, check Kayak's robots.txt file to see if there are any specific instructions for web crawlers and abide by them.
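Both ideas are illustrated in the minimal Python sketch below. It uses the standard library's `urllib.robotparser` to check whether a path is allowed, and a small rate limiter to enforce a minimum gap between requests. The names `make_robot_parser`, `RateLimiter`, and the user-agent string `my-flight-bot` are hypothetical. The robots.txt rules in the example are made up. In practice you would load Kayak's real file with `RobotFileParser.set_url(...)` followed by `read()`.

```python
import time
from urllib.robotparser import RobotFileParser


def make_robot_parser(robots_lines):
    """Build a robots.txt parser from lines that were already downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_lines)
    return parser


class RateLimiter:
    """Enforce a minimum interval, in seconds, between consecutive requests.

    The clock and sleep functions are injectable so the limiter can be tested
    without actually waiting.
    """

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last = None  # time of the previous request, if any

    def wait(self):
        """Block until at least min_interval seconds have passed since the last call."""
        now = self._clock()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now
```

Usage: call `limiter.wait()` immediately before each page load. Skip any URL for which `parser.can_fetch("my-flight-bot", url)` returns `False`.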

Regularly monitor Kayak's website for any updates or changes in their structure or anti-scraping measures. Be prepared to adapt your scraping techniques accordingly to maintain data collection efficiency.

Develop strategies to handle captchas and other anti-scraping measures that Kayak may implement. This could include implementing captcha-solving services or designing algorithms to bypass such obstacles.

Verify the accuracy of scraped data by cross-referencing it with multiple sources or conducting periodic checks. Address any discrepancies or errors promptly to maintain the integrity of your data.
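One way to cross-reference prices is sketched below. It assumes prices from Kayak and from a second source have already been collected into dictionaries keyed by `(origin, destination, startdate)`. The function name, the 10% tolerance, and the sample prices in the test are illustrative assumptions, not real data.

```python
def find_price_discrepancies(primary, reference, tolerance=0.10):
    """Compare prices from two sources keyed by (origin, destination, startdate).

    Returns a pair of sorted lists:
      - keys present in `primary` but missing from `reference`
      - keys whose prices differ by more than `tolerance` (0.10 = 10%) relative
        to the reference, or where either price is not positive
    """
    missing = sorted(k for k in primary if k not in reference)
    mismatched = []
    for key, price in primary.items():
        ref = reference.get(key)
        if ref is None:
            continue  # already reported as missing
        if price <= 0 or ref <= 0:
            mismatched.append(key)  # a non-positive price is suspicious on its own
        elif abs(price - ref) / ref > tolerance:
            mismatched.append(key)
    return missing, sorted(mismatched)
```

Rows flagged this way are candidates for a manual check or a re-scrape rather than automatic deletion.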

Be mindful of users' privacy rights and avoid scraping any personal or sensitive information from Kayak's website without proper consent. Focus on collecting only the data necessary for your intended purposes.

Optimize your scraping scripts for performance and efficiency to minimize server load and processing time. This could involve techniques such as asynchronous scraping, caching, and parallel processing.
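Caching is the easiest of these techniques to illustrate. The sketch below is a hypothetical `TTLCache` that reuses a page fetched within the last `ttl` seconds instead of requesting it again. The `fetch` callable stands in for whatever actually downloads the page, for example a function that drives Selenium, and is an assumption here.

```python
import time


class TTLCache:
    """Cache fetched pages by URL for `ttl` seconds to avoid repeat requests."""

    def __init__(self, fetch, ttl=600.0, clock=time.monotonic):
        self._fetch = fetch   # hypothetical callable: url -> page HTML
        self._ttl = ttl
        self._clock = clock
        self._store = {}      # url -> (time fetched, html)

    def get(self, url):
        """Return cached HTML if it is still fresh, otherwise fetch and store it."""
        now = self._clock()
        hit = self._store.get(url)
        if hit is not None and now - hit[0] < self._ttl:
            return hit[1]     # fresh enough: no new request is sent
        html = self._fetch(url)
        self._store[url] = (now, html)
        return html
```

Flight prices change quickly, so a short `ttl` of a few minutes is a sensible starting point.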

Implement strategies to handle IP blocking, such as rotating IP addresses or using proxies. By diversifying your IP addresses, you can mitigate the risk of getting blocked by Kayak's anti-scraping measures.

If you're scraping Kayak's data for commercial purposes or sharing the scraped data with others, be transparent about your data collection practices and ensure compliance with applicable laws and regulations.

Continuously review and update your scraping policies and procedures to reflect changes in Kayak's terms of service, technological advancements, and legal requirements. Stay informed about best practices in web scraping to ensure ongoing compliance and effectiveness.

To illustrate the process, let's walk through an example on the Kayak platform. After entering our search criteria and setting a few extra filters, such as "Nonstop", we notice that the browser's URL changes accordingly.

We can break this URL down into its components: origin, destination, start date, end date, and a suffix (`?sort=bestflight_a&fs=stops=0`) that tells Kayak to search for nonstop connections only and how to order the results.

```python
origin = "ZRH"
destination = "MXP"
startdate = "2019-09-06"
enddate = "2019-09-09"
url = ("https://www.kayak.com/flights/" + origin + "-" + destination
       + "/" + startdate + "/" + enddate + "?sort=bestflight_a&fs=stops=0")
```
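Going the other way, the components can be recovered from such a URL with the standard library's `urllib.parse`. The hypothetical helper below assumes the path layout `/flights/ORIGIN-DESTINATION/START/END` seen above and does no further validation.

```python
from urllib.parse import urlsplit, parse_qs


def parse_kayak_flight_url(url):
    """Split a Kayak-style round-trip flight URL into its components."""
    parts = urlsplit(url)
    # The path looks like /flights/ZRH-MXP/2019-09-06/2019-09-09
    _, route, startdate, enddate = parts.path.strip("/").split("/")
    origin, destination = route.split("-")
    return {
        "origin": origin,
        "destination": destination,
        "startdate": startdate,
        "enddate": enddate,
        # parse_qs splits each pair on the first "=", so fs keeps "stops=0"
        "query": parse_qs(parts.query),
    }
```

This is handy when logging or de-duplicating queries, because each URL maps back to exactly one search.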

The basic idea now is to extract and store the data we want (such as prices and departure and arrival times) from the page's underlying HTML. We mainly use two packages for this. The first is Selenium, which controls a real browser and loads the web page for us. The second is Beautiful Soup, which parses the messy HTML into a structure that is much easier to search and read.

First, we install the packages (`pip install selenium beautifulsoup4 lxml pandas numpy`) and download a browser driver, such as ChromeDriver. We place the driver in the same folder as our Python script, making sure its version matches the installed version of Chrome. We then load a few packages, tell Selenium to use ChromeDriver, and let it open the URL from above.

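```python
from selenium import webdriver
from selenium.webdriver.chrome.service import Service
from selenium.webdriver.support.ui import WebDriverWait
from selenium.webdriver.support import expected_conditions
from bs4 import BeautifulSoup
import re
import pandas as pd
import numpy as np

chrome_options = webdriver.ChromeOptions()
# Selenium 4 takes the driver path via a Service object
driver = webdriver.Chrome(service=Service("chromedriver.exe"), options=chrome_options)
driver.implicitly_wait(20)  # wait up to 20 s when looking up elements
driver.get(url)
```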

Once the page has loaded, we need to find out where the data we care about sits in the HTML. Using the browser's Inspect tool, we can see, for example, that the departure time of 8:55 p.m. is enclosed in a span with the class `depart-time base-time`.

After passing the page's HTML to BeautifulSoup, we can search it for the classes we are interested in and collect the results with a simple loop. Each search result is a round trip, so it contains two departure times (outbound and return). We therefore also need to regroup the results into meaningful departure-arrival pairs.

```python
soup = BeautifulSoup(driver.page_source, 'lxml')

deptimes = soup.find_all('span', attrs={'class': 'depart-time base-time'})
arrtimes = soup.find_all('span', attrs={'class': 'arrival-time base-time'})
meridies = soup.find_all('span', attrs={'class': 'time-meridiem meridiem'})

# Drop the trailing character of each time string
deptime = [div.getText()[:-1] for div in deptimes]
arrtime = [div.getText()[:-1] for div in arrtimes]
meridiem = [div.getText() for div in meridies]

# Each round-trip result has 2 departure times, 2 arrival times and 4 meridiem markers
deptime = np.asarray(deptime).reshape(-1, 2)
arrtime = np.asarray(arrtime).reshape(-1, 2)
meridiem = np.asarray(meridiem).reshape(-1, 4)
```
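The regrouping can also be spelled out in plain Python. The sketch below is equivalent to the `reshape` calls above combined with the column pairing used in the DataFrame. The function name is hypothetical. It assumes the same ordering as the code above: for each result, outbound departure, outbound arrival, return departure, and return arrival, each followed by one meridiem marker.

```python
def pair_round_trip_times(deptimes, arrtimes, meridiems):
    """Group flat lists of scraped times into one dict per round-trip result."""
    n = len(deptimes)
    # Every result contributes 2 departures, 2 arrivals and 4 meridiem markers
    if n % 2 or len(arrtimes) != n or len(meridiems) != 2 * n:
        raise ValueError("scraped lists do not line up")
    rows = []
    for i in range(0, n, 2):
        m = meridiems[2 * i: 2 * i + 4]  # the 4 markers of this result
        rows.append({
            "deptime_o": deptimes[i] + m[0],      # outbound departure
            "arrtime_d": arrtimes[i] + m[1],      # outbound arrival
            "deptime_d": deptimes[i + 1] + m[2],  # return departure
            "arrtime_o": arrtimes[i + 1] + m[3],  # return arrival
        })
    return rows
```

The explicit length check fails loudly when the page layout changes. The NumPy version raises a less descriptive error from `reshape` in that case, and only when the counts are not multiples of 2 or 4.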

We follow a similar procedure for prices. However, inspecting the price element shows that Kayak uses several different class combinations for its price data, so we use a regular expression to match all of them. Extracting the price also takes a few extra processing steps, because it is nested within other text.

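```python
# Kayak uses several class combinations for its price containers, so match them with a regex
regex = re.compile('Common-Booking-MultiBookProvider (.*)multi-row Theme-featured-large(.*)')
price_list = soup.find_all('div', attrs={'class': regex})

price = []
for div in price_list:
    # The price sits on the fourth line of the element's text (currency sign, amount,
    # trailing character); keep only the digits so thousands separators don't break int()
    price.append(int(re.sub(r'\D', '', div.getText().split('\n')[3])))
```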
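The fixed index `split('\n')[3]` breaks as soon as Kayak changes the text layout. A more defensive option is to search the element's text for the first dollar amount. This is a sketch under stated assumptions, not what the original code does: it assumes prices are shown in USD with a leading `$` and optional comma thousands separators. `parse_price` is a hypothetical name.

```python
import re

# "$" followed by digits, optionally grouped with commas (e.g. $89, $1234, $1,234)
PRICE_PATTERN = re.compile(r"\$\s*(\d+(?:,\d{3})*)")


def parse_price(text):
    """Return the first dollar amount in `text` as an int, or None if there is none."""
    match = PRICE_PATTERN.search(text)
    if match is None:
        return None
    return int(match.group(1).replace(",", ""))
```

Returning `None` instead of raising lets the caller decide whether to skip a result or re-scrape the page.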

Finally, we arrange everything in a tidy DataFrame:

```python
df = pd.DataFrame({"origin": origin,
                   "destination": destination,
                   "startdate": startdate,
                   "enddate": enddate,
                   "price": price,
                   "currency": "USD",
                   "deptime_o": [m + str(n) for m, n in zip(deptime[:, 0], meridiem[:, 0])],
                   "arrtime_d": [m + str(n) for m, n in zip(arrtime[:, 0], meridiem[:, 1])],
                   "deptime_d": [m + str(n) for m, n in zip(deptime[:, 1], meridiem[:, 2])],
                   "arrtime_o": [m + str(n) for m, n in zip(arrtime[:, 1], meridiem[:, 3])]
                   })
```

And that's about it. The flight data that was buried in the page's HTML has now been scraped and organized. The hardest part is done.

To add some convenience, we can wrap these steps in a function and call it with different combinations of destinations and start dates for our three-day trip. When sending several queries, the simplest way to keep Kayak from flagging us as a bot is to change the browser's user agent regularly and to wait a while between requests. The complete code then looks like this:

```python
# -*- using Python 3.7+ and Selenium 4 -*-
from selenium import webdriver
from selenium.webdriver.chrome.service import Service
from bs4 import BeautifulSoup
import re
import pandas as pd
import numpy as np
from datetime import timedelta, datetime
import time


def scrape(origin, destination, startdate, days, requests):
    global results

    enddate = datetime.strptime(startdate, '%Y-%m-%d').date() + timedelta(days)
    enddate = enddate.strftime('%Y-%m-%d')

    url = ("https://www.kayak.com/flights/" + origin + "-" + destination + "/"
           + startdate + "/" + enddate + "?sort=bestflight_a&fs=stops=0")
    print("\n" + url)

    # Use a different user agent for each request
    chrome_options = webdriver.ChromeOptions()
    agents = ["Firefox/66.0.3", "Chrome/73.0.3683.68", "Edge/16.16299"]
    agent = agents[requests % len(agents)]
    print("User agent: " + agent)
    chrome_options.add_argument('--user-agent=' + agent)
    chrome_options.add_experimental_option('useAutomationExtension', False)

    driver = webdriver.Chrome(service=Service("chromedriver.exe"), options=chrome_options)
    driver.implicitly_wait(20)
    driver.get(url)

    # Check if Kayak thinks that we're a bot
    time.sleep(5)
    soup = BeautifulSoup(driver.page_source, 'lxml')
    paragraphs = soup.find_all('p')
    if paragraphs and paragraphs[0].getText() == "Please confirm that you are a real KAYAK user.":
        print("Kayak thinks I'm a bot, which I am ... so let's wait a bit and try again")
        driver.quit()
        time.sleep(20)
        return "failure"

    time.sleep(20)  # wait 20 s for the results to load
    soup = BeautifulSoup(driver.page_source, 'lxml')

    # Get the arrival and departure times
    deptimes = soup.find_all('span', attrs={'class': 'depart-time base-time'})
    arrtimes = soup.find_all('span', attrs={'class': 'arrival-time base-time'})
    meridies = soup.find_all('span', attrs={'class': 'time-meridiem meridiem'})

    deptime = np.asarray([div.getText()[:-1] for div in deptimes]).reshape(-1, 2)
    arrtime = np.asarray([div.getText()[:-1] for div in arrtimes]).reshape(-1, 2)
    meridiem = np.asarray([div.getText() for div in meridies]).reshape(-1, 4)

    # Get the price (keep only digits so thousands separators don't break int())
    regex = re.compile('Common-Booking-MultiBookProvider (.*)multi-row Theme-featured-large(.*)')
    price_list = soup.find_all('div', attrs={'class': regex})
    price = [int(re.sub(r'\D', '', div.getText().split('\n')[3])) for div in price_list]

    df = pd.DataFrame({"origin": origin,
                       "destination": destination,
                       "startdate": startdate,
                       "enddate": enddate,
                       "price": price,
                       "currency": "USD",
                       "deptime_o": [m + str(n) for m, n in zip(deptime[:, 0], meridiem[:, 0])],
                       "arrtime_d": [m + str(n) for m, n in zip(arrtime[:, 0], meridiem[:, 1])],
                       "deptime_d": [m + str(n) for m, n in zip(deptime[:, 1], meridiem[:, 2])],
                       "arrtime_o": [m + str(n) for m, n in zip(arrtime[:, 1], meridiem[:, 3])]
                       })

    results = pd.concat([results, df], sort=False)

    driver.quit()   # close the browser and end the WebDriver session
    time.sleep(15)  # wait 15 s until the next request
    return "success"


# Create an empty dataframe
results = pd.DataFrame(columns=['origin', 'destination', 'startdate', 'enddate', 'deptime_o',
                                'arrtime_d', 'deptime_d', 'arrtime_o', 'currency', 'price'])

requests = 0
destinations = ['MXP', 'MAD']
startdates = ['2019-09-06', '2019-09-20', '2019-09-27']

for destination in destinations:
    for startdate in startdates:
        requests = requests + 1
        while scrape('ZRH', destination, startdate, 3, requests) != "success":
            requests = requests + 1

# Find the minimum price for each destination-startdate combination
results_agg = (results.astype({'price': int})
               .groupby(['destination', 'startdate'], as_index=False)['price'].min())
```
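The loop above can also be expressed with two small helpers. Both are sketched here as assumptions rather than as part of the original script. `build_queries` generates every destination and start-date combination together with its end date. `backoff_delays` computes growing wait times for retries, which is a swapped-in alternative to the fixed 20-second pause used after a failed bot check.

```python
from datetime import date, timedelta
from itertools import product


def build_queries(origin, destinations, startdates, days):
    """Yield (origin, destination, startdate, enddate) for every combination."""
    for destination, start in product(destinations, startdates):
        end = date.fromisoformat(start) + timedelta(days=days)
        yield origin, destination, start, end.isoformat()


def backoff_delays(base=15.0, factor=2.0, max_delay=300.0, attempts=5):
    """Exponential backoff: base, base*factor, base*factor**2, ... capped at max_delay."""
    return [min(base * factor ** i, max_delay) for i in range(attempts)]
```

In a real run, each tuple from `build_queries` would be passed to a scraping function. Whenever a request fails, the script would sleep for the next value from `backoff_delays` before retrying, instead of retrying indefinitely at a fixed interval.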

After specifying every combination and scraping the relevant data, we can use a heatmap from seaborn to neatly illustrate our results.

```python
import seaborn as sns
import matplotlib.pyplot as plt

heatmap_results = pd.pivot_table(results_agg, values='price',
                                 index=['destination'],
                                 columns='startdate')

sns.set(font_scale=1.5)
plt.figure(figsize=(18, 6))
sns.heatmap(heatmap_results, annot=True, annot_kws={"size": 24}, fmt='.0f', cmap="RdYlGn_r")
plt.show()
```

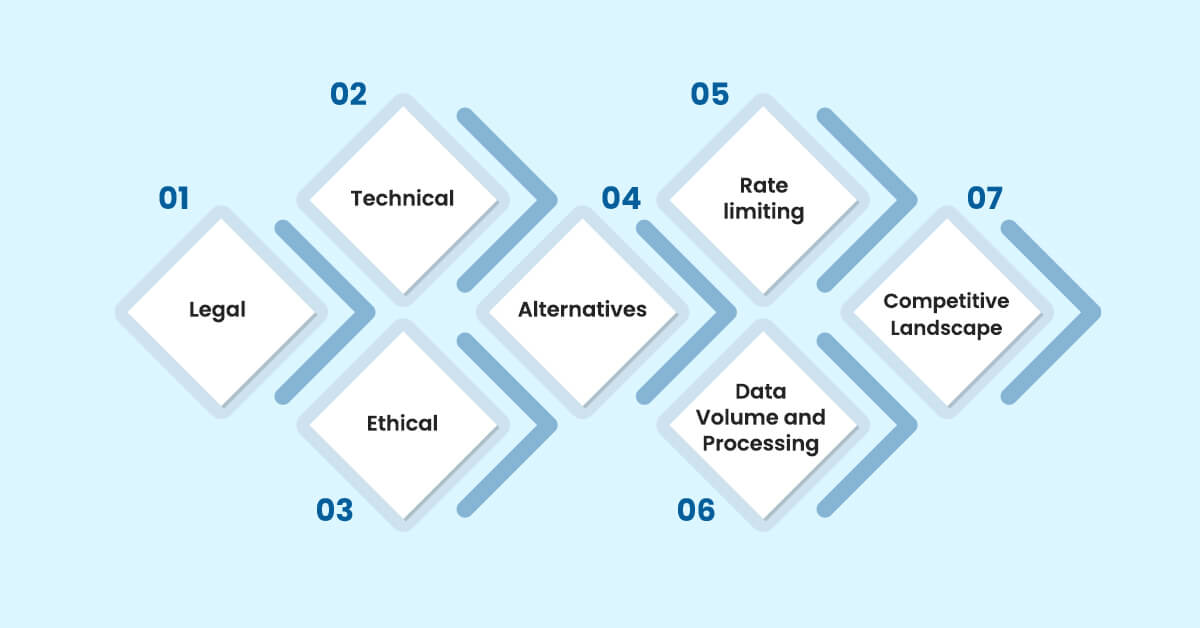

Scraping data from Kayak can be valuable, offering insights that drive business decisions and enhance customer experiences. However, alongside the benefits, there are several risks and challenges that must be carefully navigated:

Scraping data without authorization may violate Kayak's terms of service, which can result in legal action. Extracted data may be protected by copyright, and using it without permission may be unlawful. Scraping at scale can also overburden servers. This may give rise to claims of trespass to chattels, a legal doctrine that has been applied to unauthorized use of computer systems.

Kayak is likely to use anti-scraping tactics such as CAPTCHAs, IP filtering, and dynamic content rendering, which make scraping difficult. Scraped data may be incomplete or incorrect because of website updates or limitations in your scraping approach. Scraping large volumes of data can be resource-intensive and may overload Kayak's servers, causing bottlenecks or service outages.

Scraping data for commercial purposes may give an unfair edge over firms that obtain their data through legitimate channels. Scraping may also unintentionally capture personal information, creating privacy risks. Finally, the extra load that scraping places on Kayak's resources can degrade the experience of genuine users.

Look into APIs offered by travel data providers such as Skyscanner or Amadeus for permitted data access. Use Google Flights or Momondo to compare flights without scraping. Consider obtaining data from approved, appropriately licensed data suppliers.

Kayak may restrict how often you can access its website to stop people from scraping too much data too quickly. If you scrape too fast, Kayak might block your access temporarily or even permanently.

Scraping a lot of data from Kayak is like pouring a tremendous amount of information into a small funnel. It can overwhelm the systems that process the data, which then need a lot of computing power and storage to manage it all. This can be hard for businesses to handle efficiently.

As more businesses start scraping data from Kayak, everyone is trying to get a piece of the same pie, and competition gets tougher. Everyone wants to be the first to uncover something new or important in the data, so staying ahead and finding unique insights becomes harder.

Scraping flight data from Kayak gives a lot of beneficial information to travelers, businesses, and industry experts. To do it correctly, you need to know the rules, use the right tools, have a plan for how to scrape the data and make sure the info you get is accurate and ethical. If it is done correctly, we can use the data to make more intelligent choices and make traveling better for everyone. With the right approach and mindset, the possibilities for leveraging flight data to make better decisions and improve travel experiences are endless.